Google Cloud Build decrypting KMS Secrets

In trying to follow more best practices and create a true reference architecture for Java in GCP, I wanted to store my service account credential files encrypted with KMS, then pull them down and decrypt them using the GCP KMS service. In doing so, though, I kept getting an error in Cloud Build saying I didn't have decrypt permissions.

It pains me to admit that this took me much longer to solve than it should have, mostly because of a deeply rooted I.D.ten.T. error.

I started this process by following most of the excellent guide I found in a post on Medium - Using GCP's Cloud Key Management Service (KMS) to Encrypt/Decrypt Secrets.

In an earlier post I mentioned that I was storing my credentials file in a cloud storage bucket unencrypted. Not great, right? Changing that to storing an encrypted file was actually quite easy using GCP's KMS system.

The first thing to do is create a new key ring and a new key. Keep in mind that key rings cannot be deleted. The command below creates the key and expects the key ring to exist already:

gcloud kms keys create datastore-key \
  --location global \
  --keyring {your_keyring_name} \
  --purpose encryption
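The command above creates the key but assumes the key ring is already there. Here is a minimal sketch of doing both in order, using `gcloud kms keyrings create` followed by the same `keys create` call. The wrapper function `create_keyring_and_key` and its two arguments are my own naming for illustration, not part of gcloud.

```shell
# Hypothetical helper: create a key ring, then a symmetric key inside it.
# Usage: create_keyring_and_key <keyring_name> <key_name>
create_keyring_and_key() {
  keyring="$1"
  key="$2"

  # Key rings cannot be deleted, so choose the name carefully.
  gcloud kms keyrings create "$keyring" --location global || return 1

  # Same call as in the post, with the key ring name passed in.
  gcloud kms keys create "$key" \
    --location global \
    --keyring "$keyring" \
    --purpose encryption
}
```

For the names used in the rest of this post, that would be `create_keyring_and_key development-key-ring datastore-key`.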

Then we'll use the new key to encrypt our plaintext (credential) file into a new ciphertext (encrypted credential) file to upload into our cloud storage bucket. If you saw my previous post, you may know that the file I'm working with is a service account credentials file I created to allow Stackdriver logging from my Cloud Run application. This file is pulled down by one of my build steps, so before uploading it I want to encrypt it.

gcloud kms encrypt --location=global \
  --keyring=development-key-ring \
  --key=datastore-key \
  --plaintext-file=logging_service_account.json \
  --ciphertext-file=encrypted_logging_service_account.json

The command above uses the key ring we previously created and the key "datastore-key", and encrypts the local logging_service_account.json into a new file called encrypted_logging_service_account.json. This encrypted file is what I'll now put in my storage bucket to be pulled, decrypted, and injected into my Cloud Run container via Cloud Build.
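The encrypt and upload steps can be chained so that only the ciphertext ever reaches the bucket. What follows is a rough sketch, not the exact commands I ran: the function name `encrypt_and_upload` and the `encrypted_` prefix rule are assumptions for illustration, and the bucket name is whatever you pass in.

```shell
# Hypothetical helper: encrypt a credentials file, then upload the ciphertext.
# Usage: encrypt_and_upload <plaintext_file> <bucket_name>
encrypt_and_upload() {
  plaintext="$1"
  bucket="$2"
  # Name the output like the post does: encrypted_<original file name>
  ciphertext="encrypted_$(basename "$plaintext")"

  gcloud kms encrypt --location=global \
    --keyring=development-key-ring \
    --key=datastore-key \
    --plaintext-file="$plaintext" \
    --ciphertext-file="$ciphertext" || return 1

  # Only the encrypted file goes into the bucket.
  gsutil cp "$ciphertext" "gs://${bucket}/"
}
```

Calling `encrypt_and_upload logging_service_account.json {bucket_name}` would stop before the upload if the encrypt call fails.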

# Pull the credentials file from our storage bucket
- name: 'gcr.io/cloud-builders/gsutil'
  args: ['cp', 'gs://{bucket_name}/encrypted_logging_service_account.json', './src/main/jib/cred']

# Decrypt the credentials file that we just pulled down
- name: 'gcr.io/cloud-builders/gcloud'
  args: ['kms', 'decrypt', '--location', 'global', '--keyring', 'development-key-ring', '--key', 'datastore-key', '--ciphertext-file', './src/main/jib/cred/encrypted_logging_service_account.json', '--plaintext-file', './src/main/jib/cred/logging_service_account.json']

Notice that the decrypt step, the second one, is virtually a reversal of the previous encrypt step.
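Since decrypt is the reverse of encrypt, the pair can be checked locally before wiring it into a build. The sketch below assumes your own user account is allowed to decrypt with the key; `verify_roundtrip` is a made-up helper name. It decrypts into a temporary file and compares the result with the original plaintext.

```shell
# Hypothetical helper: decrypt a ciphertext file to a temporary file and
# compare it byte for byte with the original plaintext.
# Usage: verify_roundtrip <plaintext_file> <ciphertext_file>
verify_roundtrip() {
  plaintext="$1"
  ciphertext="$2"
  decrypted="$(mktemp)" || return 1

  gcloud kms decrypt --location=global \
    --keyring=development-key-ring \
    --key=datastore-key \
    --ciphertext-file="$ciphertext" \
    --plaintext-file="$decrypted"
  status=$?

  # cmp -s is silent and returns 0 only when the files are identical.
  if [ "$status" -eq 0 ] && cmp -s "$plaintext" "$decrypted"; then
    result=0
  else
    result=1
  fi

  rm -f "$decrypted"   # do not leave decrypted credentials lying around
  return "$result"
}
```

A zero exit status from `verify_roundtrip logging_service_account.json encrypted_logging_service_account.json` means the ciphertext decrypts back to the same bytes.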

Now we should be good to go. Run our cloud build and all is right with the world... until we get a permissions issue.

Step #1: ERROR: (gcloud.kms.decrypt) PERMISSION_DENIED: Permission 'cloudkms.cryptoKeyVersions.useToDecrypt' denied for resource 'projects/inventory-dev-project/locations/global/keyRings/development-key-ring/cryptoKeys/datastore-key'.
Finished Step #1
ERROR
ERROR: build step 1 "gcr.io/cloud-builders/gcloud" failed: exit status 1

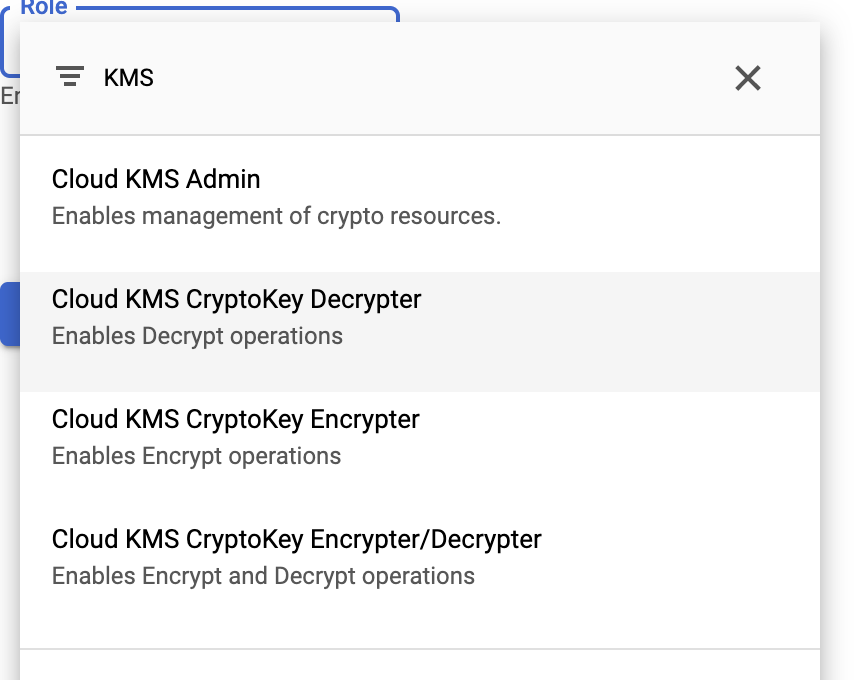

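The fix is to give the Cloud Build service account the Cloud KMS CryptoKey Decrypter role, which can be done in the IAM console. In my trial and error, though, I did learn that I can also do this via gcloud commands: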

gcloud kms keyrings add-iam-policy-binding development-key-ring \
  --location=global \
  --member=serviceAccount:{unique_id}@cloudbuild.gserviceaccount.com \
  --role=roles/cloudkms.cryptoKeyDecrypter
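The binding above covers every key in the key ring. A narrower variant, sketched below, grants the role on the single key instead. It rests on two assumptions: that the build runs as the default Cloud Build service account, and that the `{unique_id}` in its email is the project number. Both function names are hypothetical.

```shell
# Hypothetical helper: build the default Cloud Build service account email
# from the project number (assumes the build runs as that default account).
cloudbuild_service_account() {
  project_id="$1"
  project_number="$(gcloud projects describe "$project_id" \
    --format='value(projectNumber)')" || return 1
  printf '%s@cloudbuild.gserviceaccount.com\n' "$project_number"
}

# Hypothetical helper: grant decrypt on one key only, not the whole key ring.
# Usage: grant_decrypt_on_key <project_id>
grant_decrypt_on_key() {
  member="serviceAccount:$(cloudbuild_service_account "$1")" || return 1
  gcloud kms keys add-iam-policy-binding datastore-key \
    --location=global \
    --keyring=development-key-ring \
    --member="$member" \
    --role=roles/cloudkms.cryptoKeyDecrypter
}
```

Scoping the role to the key means the build can only decrypt with `datastore-key`, not with any other key added to the ring later.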

So there you have it. Encrypting a file, putting it in cloud storage, pulling it during cloud build, decrypting it, and injecting it into our container to be used at runtime.

Some other things I learned and next steps:

1. Determine which service account the GCP process is running as

While debugging why my service account kept getting permission denied even though I kept adding permissions, I realized I was working with the wrong service account. I was able to figure this out with a simple step in my cloud build that told me who the agent was running as:

- name: 'gcr.io/cloud-builders/gcloud'
  args: ['auth', 'list']

which output:

Credentialed Accounts
ACTIVE  ACCOUNT
*       {unique_project_id}@cloudbuild.gserviceaccount.com

2. Next step: encrypt the storage bucket and not the file itself

I believe it would be beneficial to encrypt the bucket and everything that goes into it, so that everything is encrypted automatically. This should ensure much better security.
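I have not done this yet, so treat the following as a sketch of how that next step might look rather than something tested in this project. It uses `gsutil kms authorize` and `gsutil kms encryption` to set a default KMS key on a bucket. The function name and arguments are hypothetical. As far as I know, Cloud Storage expects the key to be in the same location as the bucket, so the global key from this post may not qualify.

```shell
# Hypothetical helper: make a KMS key the default encryption key of a bucket.
# Usage: set_bucket_default_key <project_id> <bucket_name> <key_resource_name>
set_bucket_default_key() {
  project_id="$1"
  bucket="$2"
  # Full resource name, in the form:
  # projects/<project>/locations/<location>/keyRings/<ring>/cryptoKeys/<key>
  key="$3"

  # Let the project's Cloud Storage service agent use the key.
  gsutil kms authorize -p "$project_id" -k "$key" || return 1

  # New objects written without an explicit key are encrypted with this one.
  gsutil kms encryption -k "$key" "gs://${bucket}"
}
```

With a default key in place, the build would no longer need its own decrypt step for the file, since Cloud Storage handles encryption and decryption on write and read.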
